AI Maturity Scorecard: a tool to measure GenAI adoption in business

Measure your company's tangible progress in AI initiatives and capabilities

Generative AI has been mainstream for more than two years. Most companies have several GenAI initiatives underway, driven by a core team of internal GenAI enthusiasts. However, from the CEO's perspective, it is often hard to know whether these projects are moving the needle.

How do they compare with best practices? Are they being adopted by 2%, 20% or 80% of the organization?

The GenAI Maturity Scorecard

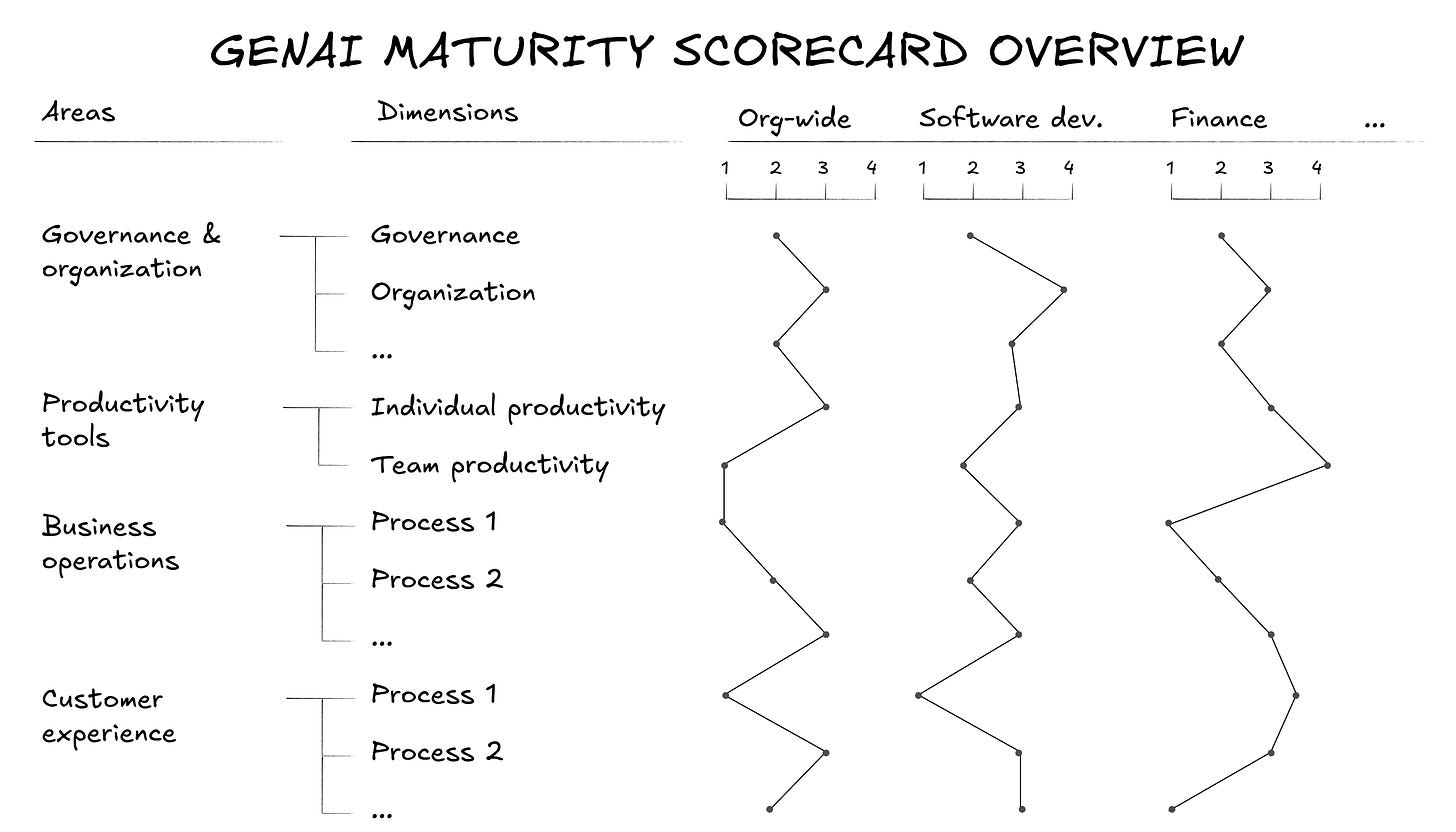

The GenAI Maturity Scorecard is a structured report card that measures the progress made by the organization as a whole, as well as each of its departments, in terms of GenAI adoption.

More importantly, the Scorecard can be updated over time, typically on a quarterly basis. This enables the CEO to access a bird's-eye view of the actual progress that has been made.

Typically, the Scorecard is structured around four areas:

Governance and organization. The dimensions associated with this area do not vary much by department. They include:

Governance

Organization

Infrastructure

Risk management

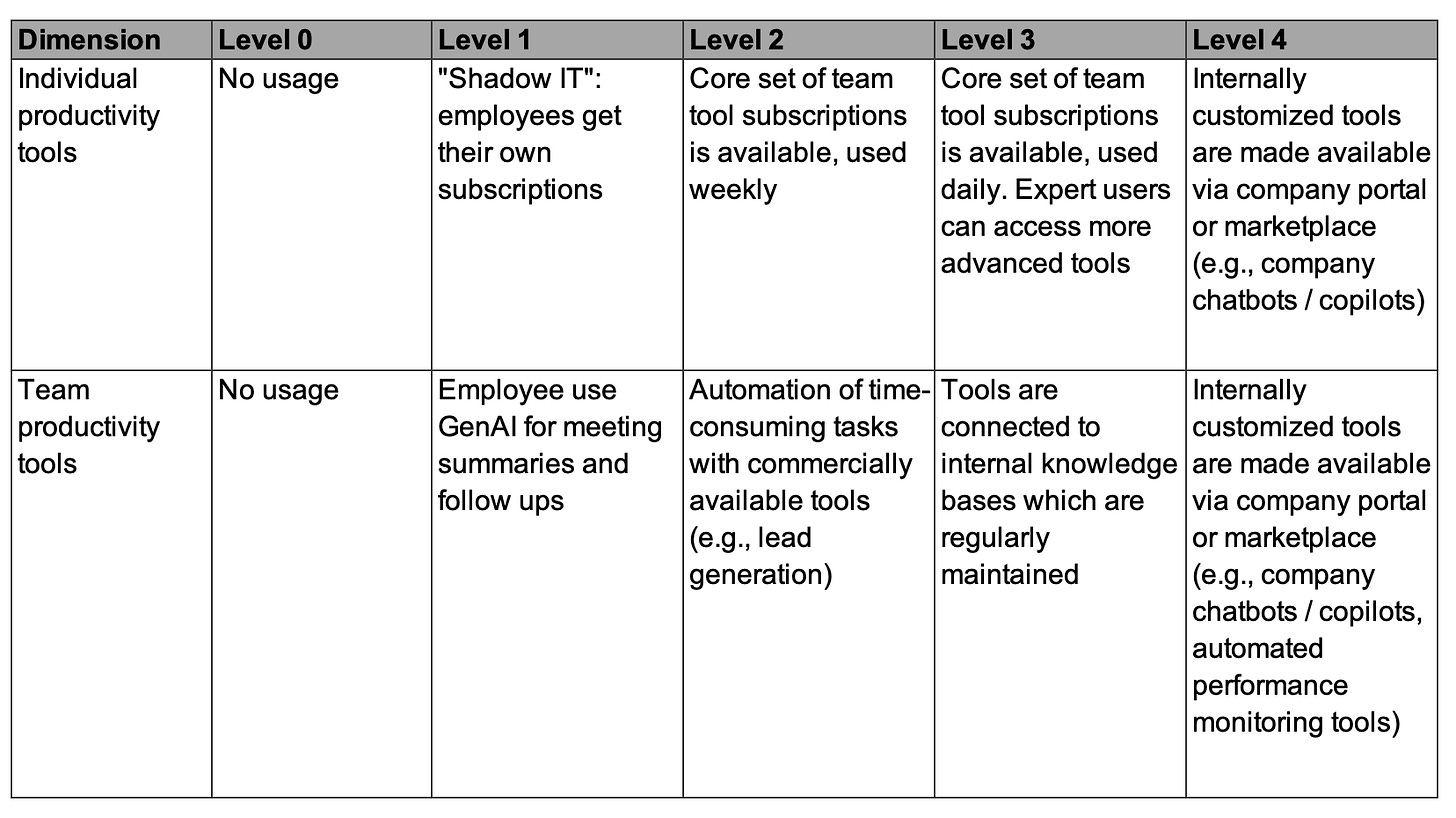

Productivity tools. The dimensions associated with this area do not vary much by department either. They include:

Individual productivity tools

Team productivity tools

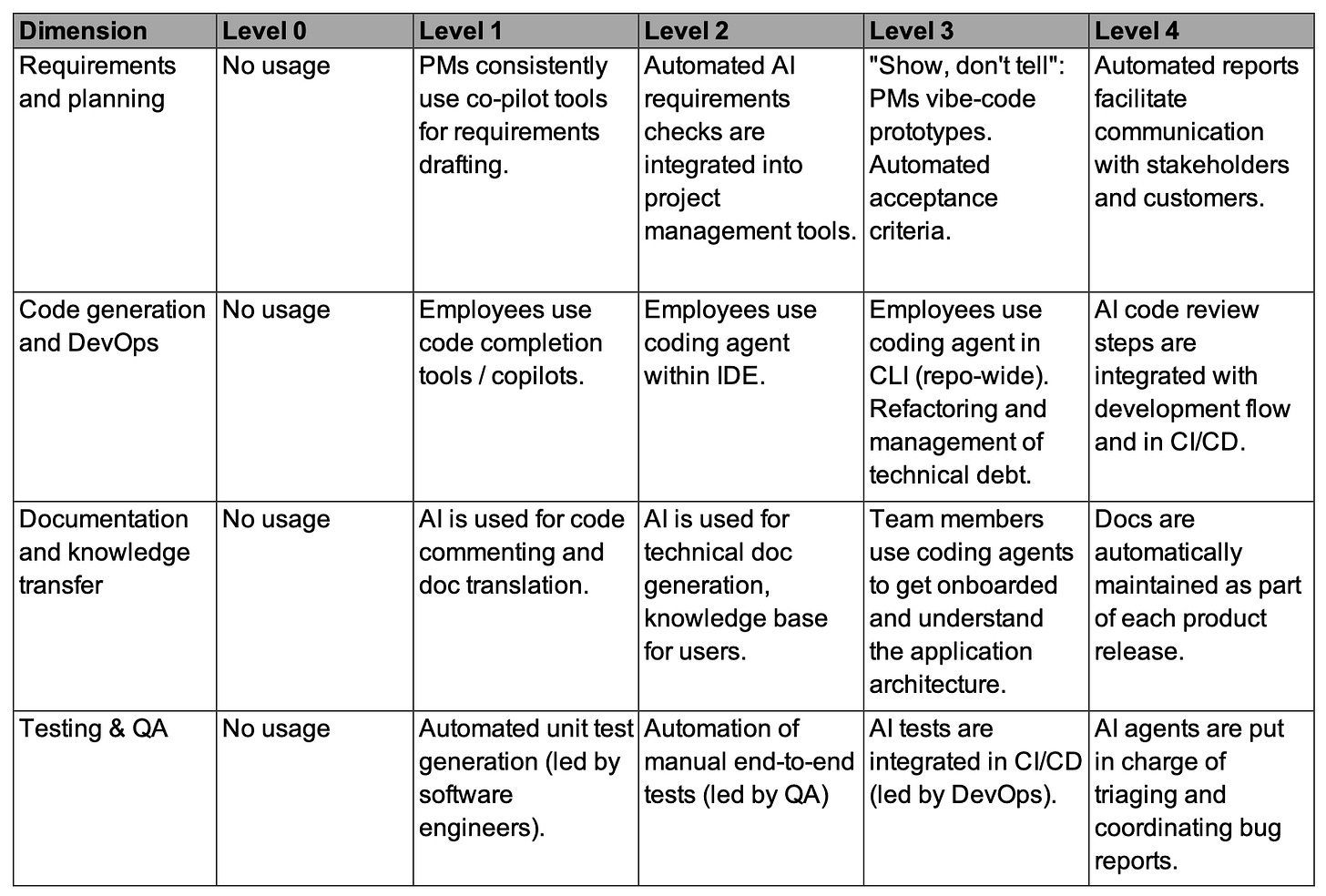

Business operations. This area focuses on the application of GenAI for internal process optimization and automation. Its dimensions vary by department. For example, for the software engineering department, the dimensions may include:

Requirements and planning

Code generation and DevOps

Documentation and knowledge transfer

Testing & QA

Customer experience. This area encompasses initiatives that impact customer onboarding, product delivery, and customer support. Its dimensions vary by business. For example, dimensions may include:

Customer onboarding

User interface

Customer success

Customer support
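One way to make the four areas concrete is to record them as a simple mapping from area to dimensions. The Python sketch below uses the example dimensions listed above. The names `SCORECARD_TEMPLATE` and `area_score`, and the choice of an unweighted mean to roll dimension scores up into an area score, are illustrative assumptions rather than part of the Scorecard definition.

```python
from statistics import mean

# Example dimensions taken from the lists above; a real scorecard would
# customize the last two areas per department and per business.
SCORECARD_TEMPLATE: dict[str, list[str]] = {
    "Governance and organization": [
        "Governance", "Organization", "Infrastructure", "Risk management",
    ],
    "Productivity tools": [
        "Individual productivity tools", "Team productivity tools",
    ],
    "Business operations": [  # software engineering example
        "Requirements and planning", "Code generation and DevOps",
        "Documentation and knowledge transfer", "Testing & QA",
    ],
    "Customer experience": [
        "Customer onboarding", "User interface",
        "Customer success", "Customer support",
    ],
}


def area_score(area: str, dimension_scores: dict[str, float]) -> float:
    """Average the scores of the dimensions belonging to one area.

    Assumption: every dimension counts equally within its area.
    """
    dimensions = SCORECARD_TEMPLATE[area]
    missing = [d for d in dimensions if d not in dimension_scores]
    if missing:
        raise KeyError(f"missing scores for: {missing}")
    return mean(dimension_scores[d] for d in dimensions)


# Placeholder scores, for illustration only.
example_scores = {
    "Individual productivity tools": 2.0,
    "Team productivity tools": 1.0,
}
print(area_score("Productivity tools", example_scores))  # 1.5
```

A company that considers some dimensions more important than others could replace the plain mean with a weighted one.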

The Scorecard is not purely technical: it does not focus solely on whether a specific GenAI use case, such as software development agents or customer chatbots, has been implemented. It also depends heavily on how widely each use case is adopted across the organization.

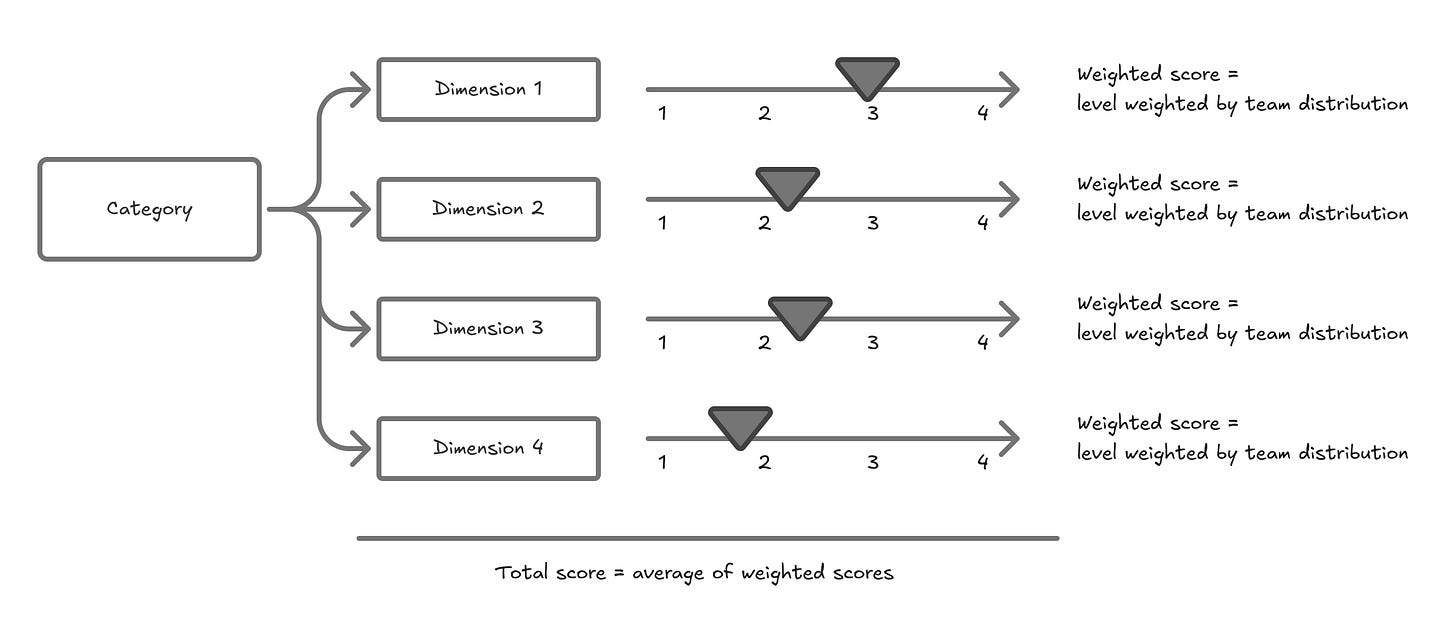

For example, if Claude Code is used for agentic software development (Level 4), but only 10% of the team uses it, while 90% of the team merely uses code completion in VS Code (Level 1), then the weighted average score of the team is 4 × 0.1 + 1 × 0.9 = 1.3.
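The same calculation can be written as a small Python function. This is a minimal sketch: the function name `weighted_maturity_score` and the input format (a mapping from level to the fraction of the team at that level) are assumptions made for illustration.

```python
def weighted_maturity_score(level_shares: dict[int, float]) -> float:
    """Return the adoption-weighted score for one dimension.

    level_shares maps a maturity level to the fraction of the team
    operating at that level; the fractions must sum to 1.
    """
    total = sum(level_shares.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"shares must sum to 1.0, got {total}")
    return sum(level * share for level, share in level_shares.items())


# 10% of the team at Level 4, 90% at Level 1, as in the example above.
team_score = weighted_maturity_score({4: 0.10, 1: 0.90})
print(round(team_score, 2))  # 1.3
```

Validating that the shares sum to 1 catches a common data-entry mistake, such as entering percentages (10, 90) instead of fractions.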

The following diagram illustrates this approach.

Obviously, the Scorecard is not the only way to look at GenAI initiatives. It can be complemented by:

Project management dashboards, to track the progress of each initiative.

Business dashboards, to track the impact of each initiative in terms of additional sales leads, increased productivity, higher customer satisfaction, or any other metric.

Defining scorecard dimensions and levels

Scorecard dimensions and levels must be customized according to the company's business and key processes. The following paragraphs provide a starting point.

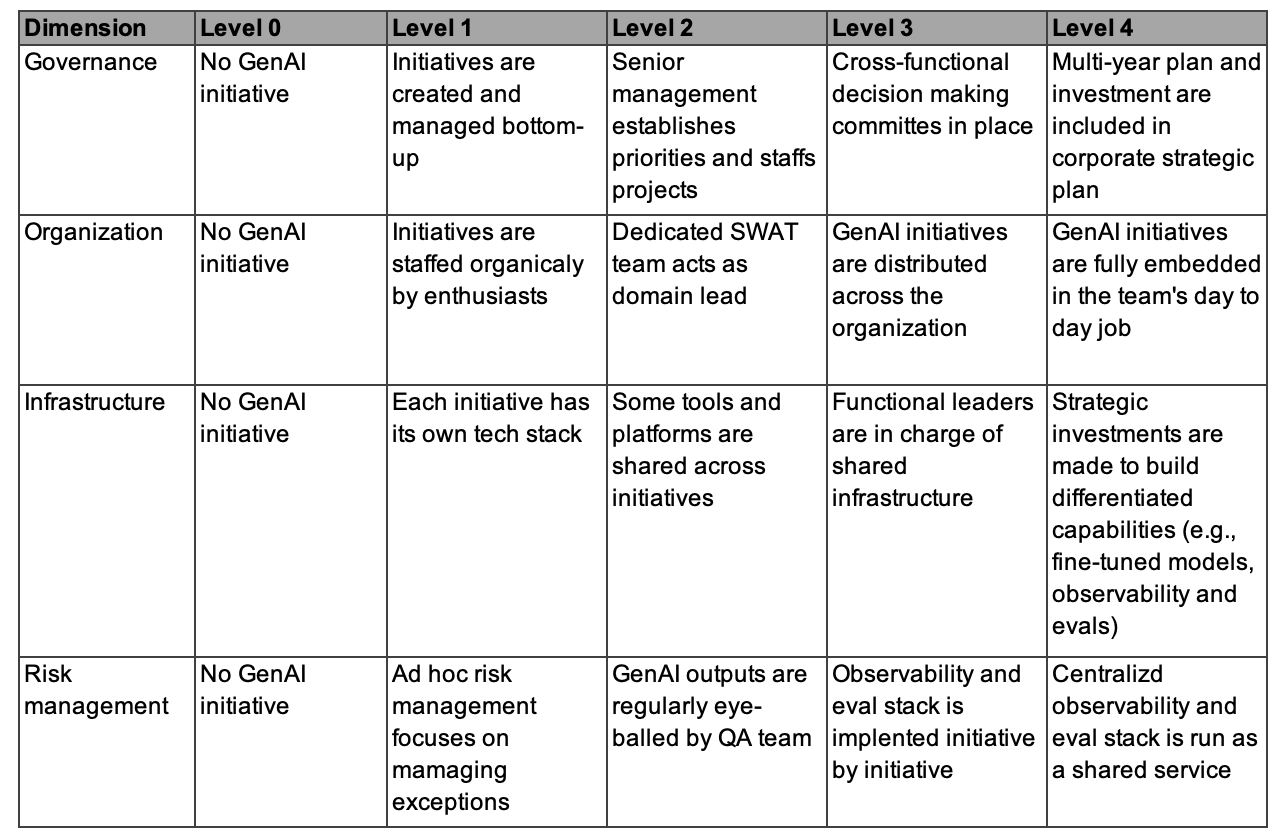

Governance and organization

This area measures the level of adoption of GenAI best practices in terms of governance, organization, infrastructure, and risk management. Below is a possible starting point.

Productivity tools

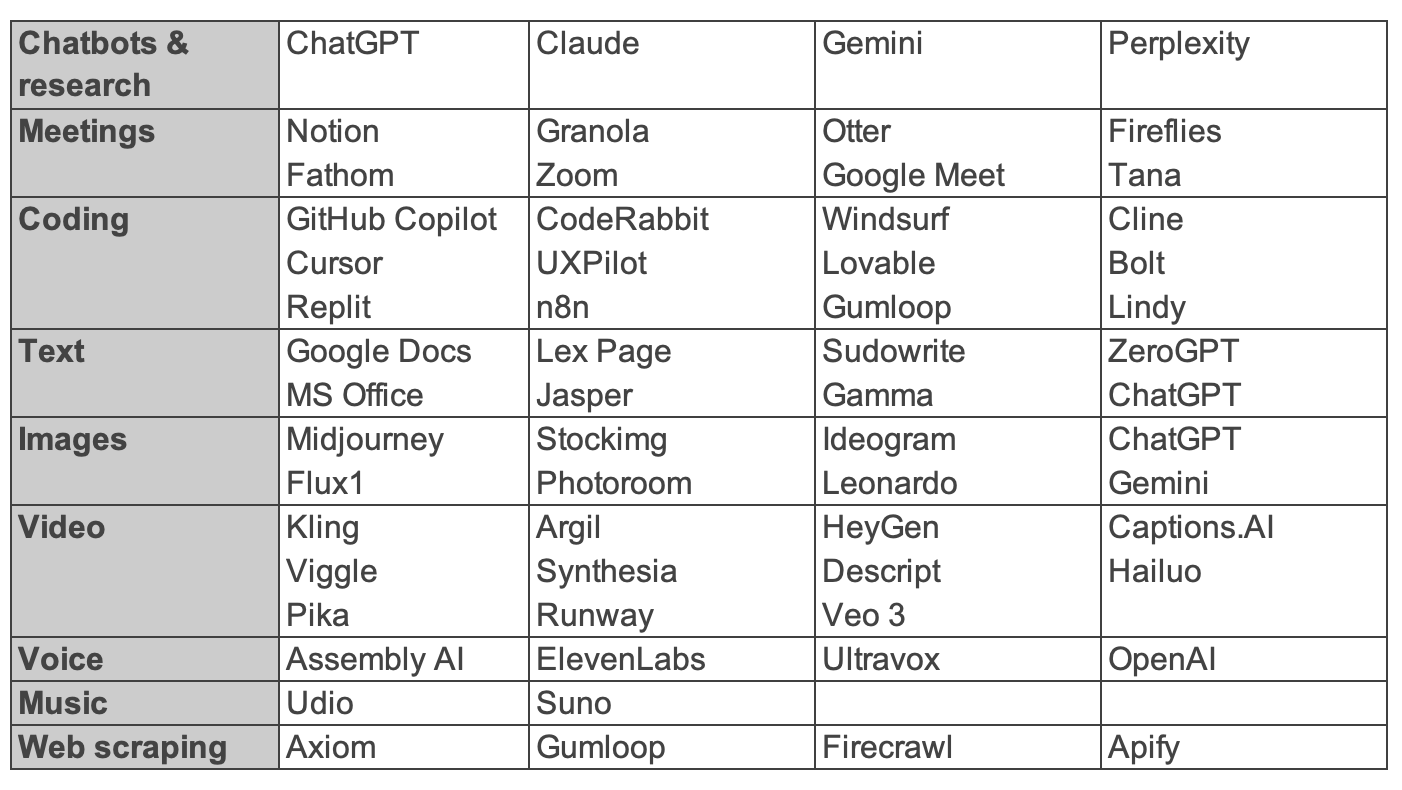

This area measures the level of adoption of commercially available productivity tools, usually provided to the team as per-user subscriptions.

Such tools include personal chatbots, meeting transcription and summarization tools, content generation tools for the marketing & sales team, and coding assistants for the software engineering team. Below are some examples.

Here's a possible starting point for level definition.

Business operations

This area focuses on the application of GenAI for internal process optimization and automation, using either commercially available software integrated into day-to-day workflows, no-code platforms, or custom software. Its dimensions vary by department. For example, for the software engineering department, the dimensions may include requirements and planning, code generation and DevOps, documentation and knowledge transfer, and testing & QA.

Specific dimensions vary for the marketing team, the sales team, the finance team, the HR team, and so on.

Customer experience

This area encompasses initiatives that impact customer onboarding, product delivery, and customer support. Its dimensions vary widely by industry and company.

For example:

Level 1 may involve the deployment of a chatbot for customer support (many technology companies, telecoms, and airlines already have this).

Level 2 could entail the deployment of a chat copilot to help users in their actual use of the company's product (e.g., Microsoft 365 Copilot).

Level 3 often enables users to easily deploy automations within the product (e.g., Microsoft Azure AI Studio).

Level 4 typically involves redesigning products from the ground up with an AI-first perspective (e.g., Lovable reinvents the front-end development and deployment process around a chat interface, whereas app platforms such as Google App Engine or DigitalOcean start from repositories or Docker images).

Measuring scorecard levels

Once the areas and dimensions are defined, establishing levels and scoring team members usually requires the involvement of experts who have seen GenAI implementations at other companies (particularly tech companies) and can probe the organization through interviews to assess the actual level of GenAI adoption.

The investigation can involve:

Workshops

1:1 interviews

Online team surveys
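Survey results can be turned into the adoption shares used for scoring. The sketch below assumes, hypothetically, that each respondent has been assigned a single maturity level for a given dimension; the function name `level_shares` and the sample responses are placeholders, not real data.

```python
from collections import Counter


def level_shares(responses: list[int]) -> dict[int, float]:
    """Convert per-person maturity levels into the share of the team at each level."""
    if not responses:
        raise ValueError("at least one response is required")
    counts = Counter(responses)
    n = len(responses)
    return {level: counts[level] / n for level in sorted(counts)}


# Placeholder survey: nine people at Level 1, one person at Level 4.
responses = [1, 1, 1, 1, 1, 1, 1, 1, 1, 4]
shares = level_shares(responses)
print(shares)  # {1: 0.9, 4: 0.1}

# Adoption-weighted score for the dimension.
dimension_score = sum(level * share for level, share in shares.items())
print(round(dimension_score, 2))  # 1.3
```

Workshops and 1:1 interviews remain useful for checking whether self-reported levels match actual practice.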

Regardless of how expert the evaluators are, the first scorecards will incorporate some level of subjective judgment. What's more important than the actual scores is their evolution over time. Quarterly scorecard updates are essential in tracking the organization's progress.
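Because the trend matters more than any single score, it can help to compute the quarter-over-quarter change explicitly. The following Python sketch assumes scores are stored per quarter in chronological order; the function name `quarterly_changes`, the quarter labels, and the values are placeholders for illustration.

```python
def quarterly_changes(history: dict[str, float]) -> dict[str, float]:
    """Return the score change versus the previous quarter.

    history must list quarters in chronological order (dicts preserve
    insertion order in Python 3.7+).
    """
    quarters = list(history)
    return {
        current: round(history[current] - history[previous], 2)
        for previous, current in zip(quarters, quarters[1:])
    }


# Placeholder scores for one department, not real measurements.
history = {"2025-Q1": 1.3, "2025-Q2": 1.6, "2025-Q3": 2.1}
print(quarterly_changes(history))  # {'2025-Q2': 0.3, '2025-Q3': 0.5}
```

A flat or negative change on a dimension is a prompt for follow-up interviews rather than a verdict, given the subjective judgment built into early scores.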

Takeaway messages

Companies need a systematic way to assess whether their AI initiatives are moving the needle beyond isolated pilot projects. The GenAI Maturity Scorecard offers a structured framework for measuring adoption.

The scorecard's value lies in measuring what percentage of teams use AI tools, not just whether the tools exist. Importantly, the scorecard should be updated quarterly to reveal adoption trends.

The Scorecard typically covers four assessment areas: Governance & Organization (company-wide practices), Productivity Tools (individual and team subscriptions), Business Operations (department-specific process automation), and Customer Experience (external-facing AI applications).

Scorecard dimensions and levels must be tailored to your company's industry, processes, and strategic priorities.